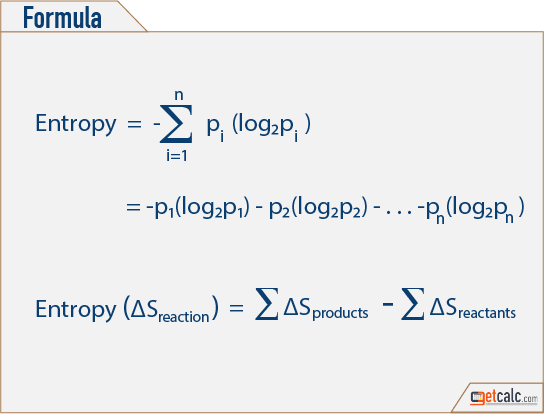

The entropy of a discrete random variable $X$ is

$$H(X) = -\sum_{x \in X} p(x) \log_2 p(x)$$

With the base-2 logarithm the unit is the bit: $H(X)$ is the average number of bits required to store the information present in $X$. In this sense, entropy is a measure of the randomness of a random variable.

In thermodynamics, entropy is written $S$ and measures the randomness of a system of particles. Numerically, the change in entropy is the ratio of the heat exchanged to the absolute temperature; for heat $Q_{\text{rev}}$ exchanged reversibly at constant temperature $T$,

$$\Delta S = \frac{Q_{\text{rev}}}{T}$$

Heat is measured in joules and absolute temperature in kelvin, so the SI unit of entropy is the joule per kelvin (J/K).
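As an illustration of the bit-valued entropy of a random variable, here is a minimal Python sketch. The function name `shannon_entropy_bits` and the example distributions are assumptions made for this example, not something the text prescribes.

```python
import math

def shannon_entropy_bits(probs):
    """Entropy in bits of a discrete distribution given as a list of probabilities."""
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 contribute nothing (p * log2(1/p) -> 0 as p -> 0).
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

# Illustrative distributions (assumed for this example)
fair_coin = shannon_entropy_bits([0.5, 0.5])
biased_coin = shannon_entropy_bits([0.9, 0.1])
fair_die = shannon_entropy_bits([1 / 6] * 6)

print(f"fair coin:   {fair_coin:.3f} bits")
print(f"biased coin: {biased_coin:.3f} bits")
print(f"fair die:    {fair_die:.3f} bits")
```

A fair coin comes out at exactly 1 bit, while the biased coin needs less than 1 bit on average because its outcome is more predictable.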


Austrian physicist Ludwig Boltzmann explained entropy as a measure of the number of possible microscopic arrangements, or states, of the individual atoms in a system. Entropy is a thermodynamic state quantity, a property of the body of particles as a whole.
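The text does not spell out Boltzmann's relation; it is usually written $S = k_B \ln W$, where $W$ is the number of microscopic arrangements. The sketch below assumes that form and applies it to a hypothetical toy system of two-state particles. The function name and the toy system are illustrative assumptions.

```python
import math

# Boltzmann constant in J/K (exact value in the SI since 2019)
BOLTZMANN_K = 1.380649e-23

def boltzmann_entropy(num_microstates):
    """Entropy in J/K for a system with the given number of equally likely microstates."""
    if num_microstates < 1:
        raise ValueError("a system has at least one microstate")
    return BOLTZMANN_K * math.log(num_microstates)

# Hypothetical toy system: 100 independent particles, each in one of two states,
# so the number of arrangements is W = 2**100.
n_particles = 100
microstates = 2 ** n_particles
toy_entropy = boltzmann_entropy(microstates)

print(f"W = 2^{n_particles}, S = {toy_entropy:.3e} J/K")
```

A system with a single possible arrangement has zero entropy under this relation, and the entropy grows only logarithmically as the number of arrangements increases.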


The process of dissolution generally increases entropy because the solute particles become separated from one another when a solution is formed. More broadly, entropy increases when a substance is broken up into multiple parts. The principle of entropy provides a fascinating insight into the direction of spontaneous change in many everyday phenomena.

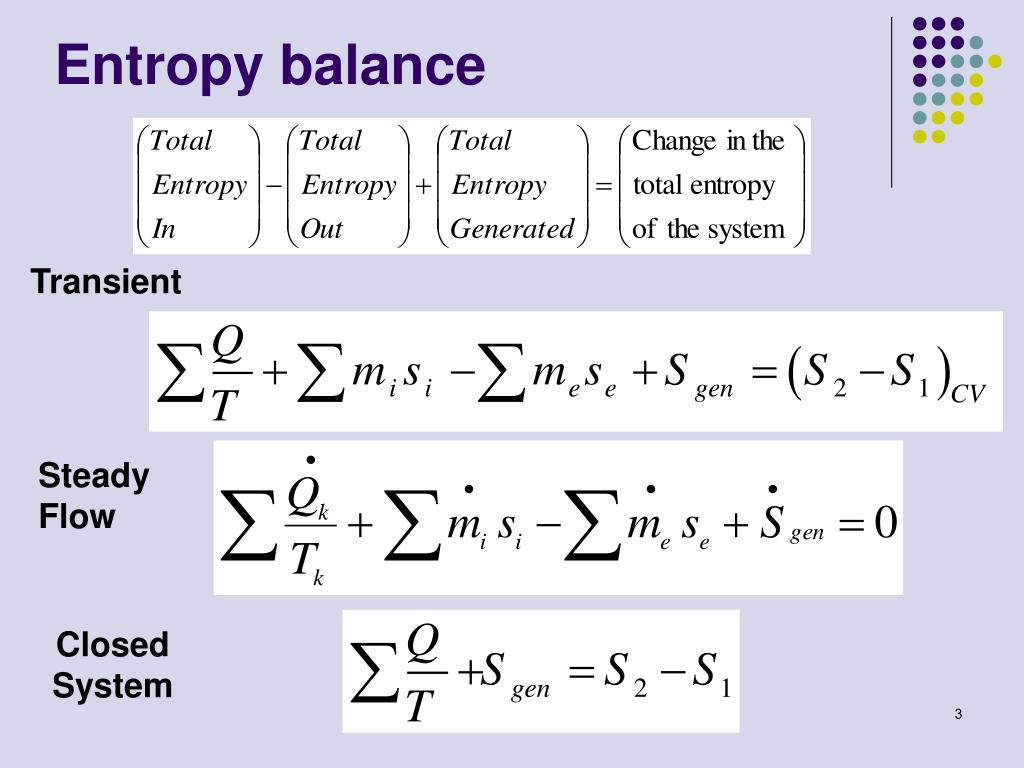

Entropy measures the system's thermal energy per unit temperature that is unavailable for doing useful work. Because work is obtained from ordered molecular motion, the amount of entropy is also a measure of the system's molecular randomness or disorder.
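To make "energy per unit temperature" concrete, the sketch below divides a quantity of heat in joules by an absolute temperature in kelvin, giving a result in J/K. It assumes the heat is transferred reversibly at constant temperature. The function name and the numbers are hypothetical, chosen only for illustration.

```python
def entropy_change(heat_joules, temperature_kelvin):
    """Entropy change in J/K for heat exchanged reversibly at a constant absolute temperature."""
    if temperature_kelvin <= 0:
        raise ValueError("absolute temperature must be positive")
    return heat_joules / temperature_kelvin

# Hypothetical example: 1000 J of heat absorbed at 300 K
delta_s = entropy_change(1000.0, 300.0)
print(f"{delta_s:.3f} J/K")  # prints "3.333 J/K"
```

The same amount of heat produces a larger entropy change at a lower temperature, which the function reflects because the temperature sits in the denominator.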
